My starting point for this project was the work done on the Big Mouth Phatt Bass, my previous fishy project. The microcontroller, motor driver and the fish itself were unchanged; the only real difference was replacing the MP3 player board with an audio amplifier, so that the music comes directly from the ESP32 instead of from a dedicated player. This then allowed me to write software that took a Bluetooth audio input, sent it to the I2S amplifier for playback, and triggered fish movement based on the content of that audio.

I chose the common MAX98357A audio amplifier, on a breakout board originally from Adafruit, though I expect mine was a clone. This is well documented online, and the use of the I2S digital standard for audio meant I didn’t have to worry about audio synthesis on the ESP32 or current draw from its pins. All that was required was to grab digital data from Bluetooth and output it as I2S, getting the levels and sample rate correct.
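To give a feel for what "getting the levels correct" can involve, here is a minimal sketch of scaling 16-bit PCM samples before they go out over I2S. It is not the code from this project: the function name `apply_gain_q8` and the fixed-point gain format (256 means unity gain) are assumptions made for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical helper (not from the original project): scale signed 16-bit
// PCM samples in place by gain_q8 / 256, clamping to the int16_t range so
// that loud passages saturate instead of wrapping around.
void apply_gain_q8(int16_t* samples, std::size_t count, int32_t gain_q8) {
    assert(samples != nullptr || count == 0);
    assert(gain_q8 >= 0 && gain_q8 <= 4096);  // up to 16x, keeps the maths in 32 bits
    for (std::size_t i = 0; i < count; ++i) {
        int32_t scaled = (static_cast<int32_t>(samples[i]) * gain_q8) / 256;
        if (scaled > INT16_MAX) scaled = INT16_MAX;
        if (scaled < INT16_MIN) scaled = INT16_MIN;
        samples[i] = static_cast<int16_t>(scaled);
    }
}
```

A gain of 128 halves the level and 512 doubles it. Integer maths is used here because it is cheap on a microcontroller, but a floating-point version would work just as well.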

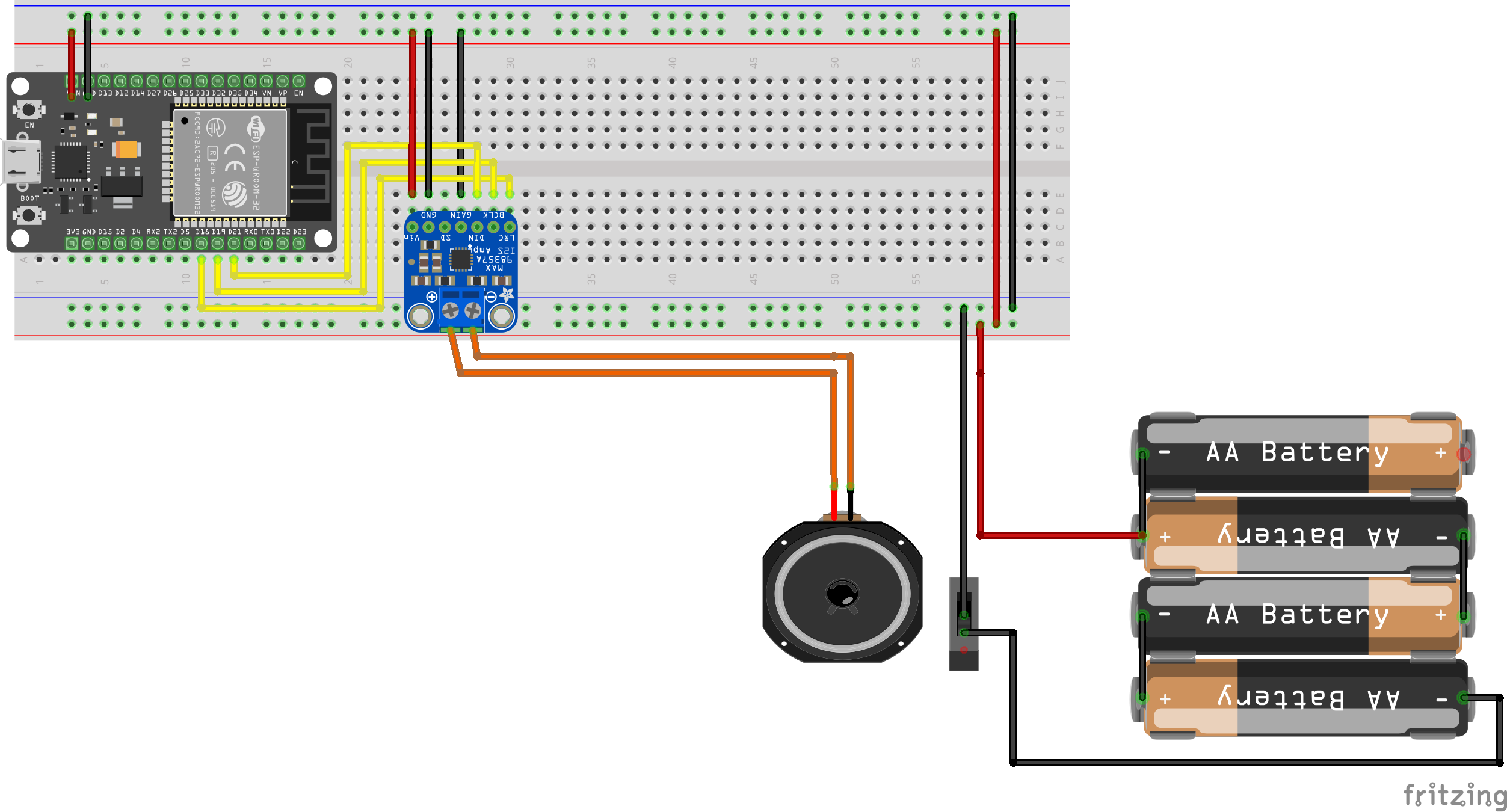

I jumped into some breadboard prototyping, but since the motor control aspect was already well understood, I did not bother with that and only tested the audio amplifier. I set up the breadboard like this:

With reference to this helpful guide, I coded up the ESP32 to behave as a Bluetooth A2DP receiver, which would play the audio it received via I2S using three pins: 18 for WS (word select, which marks the left/right channel), 19 for BCLK (bit clock) and 21 for data.
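As a rough illustration of what those three I2S lines carry, the sketch below works out the clock rates involved. It assumes stereo audio with each channel slot exactly as wide as the sample (the usual case for 16-bit audio), and the names `I2sClocks` and `i2s_clocks` are made up for this example.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper for illustration only.
struct I2sClocks {
    uint32_t ws_hz;    // word select completes one cycle per stereo frame
    uint32_t bclk_hz;  // one bit-clock pulse per bit, across both channels
};

// Assumes two channels and a slot width equal to bits_per_sample.
I2sClocks i2s_clocks(uint32_t sample_rate_hz, uint32_t bits_per_sample) {
    assert(bits_per_sample == 16 || bits_per_sample == 32);
    const uint32_t channels = 2;
    return I2sClocks{sample_rate_hz, sample_rate_hz * bits_per_sample * channels};
}
```

For 16-bit stereo at 44.1 kHz, a common format for Bluetooth audio, this gives a 44.1 kHz word select and a bit clock of about 1.41 MHz, with the data line changing in step with the bit clock.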

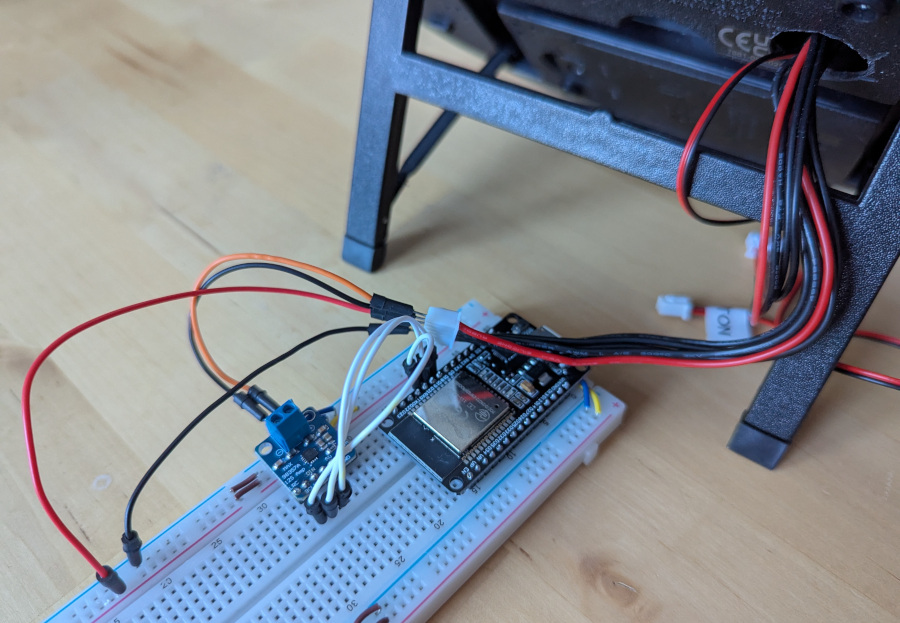

With the fish’s speaker pins connected, this allowed me to play audio from my phone through the fish.

The next step was to trigger motor movement based on the audio. The caveat is that the ESP32’s capability here is very limited. Between the Bluetooth audio reception, the I2S output, and an FFT to detect audio, the device’s program memory is 90% full, and I had to split processing across both cores. About all I could manage was to stick the head out and flap the mouth in time to any audio. This means that while the effect is good for voice assistant responses, it’s not great for music.
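The general idea of "flap the mouth in time to any audio" can be sketched without an FFT at all. The example below is a simpler stand-in, not the approach used in this project: it measures the RMS level of each block of samples and uses two thresholds (hysteresis) so the mouth does not chatter when the level hovers around a single cut-off. The `MouthDetector` name and the threshold values are assumptions that would need tuning on real hardware.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>

// Hypothetical level detector; thresholds are illustrative, not measured.
struct MouthDetector {
    double open_threshold;   // RMS level above which the mouth opens
    double close_threshold;  // RMS level below which the mouth closes
    bool mouth_open;

    MouthDetector(double open_t, double close_t)
        : open_threshold(open_t), close_threshold(close_t), mouth_open(false) {
        assert(close_t <= open_t);
    }

    // Feed one block of 16-bit samples; returns the updated mouth state.
    bool update(const int16_t* samples, std::size_t count) {
        assert(samples != nullptr && count > 0);
        double sum_sq = 0.0;
        for (std::size_t i = 0; i < count; ++i) {
            const double s = samples[i];
            sum_sq += s * s;
        }
        const double rms = std::sqrt(sum_sq / static_cast<double>(count));
        if (!mouth_open && rms > open_threshold) {
            mouth_open = true;
        } else if (mouth_open && rms < close_threshold) {
            mouth_open = false;
        }
        return mouth_open;
    }
};
```

The returned flag would then drive the mouth motor through whatever motor driver code is already in place. Levels between the two thresholds leave the mouth in its current state.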

What I originally wanted to achieve was a fish that moved its head and mouth only for the vocals of a song, and otherwise flapped its tail to the beat. Feel free to play around with FFT bins, levels and buffer sizes if you like, but I suspect this is not achievable with the chosen hardware, because detecting vocals in music is a very difficult job. As a future upgrade I may instead try a Raspberry Pi Zero 2 W, running webrtcvad or similar software. The rest of the hardware could be adapted to the Pi Zero as well, for example with a HiFiBerry DAC and a motor driver HAT. I’m not sure yet if this would work, or fit inside the enclosure, or if powering a “real” computer from 4 AA batteries is feasible.
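For anyone who does want to experiment with FFT bins, a useful starting point is working out which bins correspond to a given frequency band. The sketch below assumes you already have an array of FFT magnitudes from whichever FFT library you use; the names `BinRange`, `bins_for_band` and `band_energy` are hypothetical, and any particular band (say 300 Hz to 3400 Hz as a rough voice range) is only a guess to tune from, not a proven vocal detector.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical helpers for experimenting with FFT bins.
struct BinRange {
    std::size_t first;
    std::size_t last;  // inclusive
};

// Bin k is centred on k * sample_rate / fft_size, so dividing inverts that.
// Each edge rounds down to the bin at or just below the requested frequency.
BinRange bins_for_band(uint32_t low_hz, uint32_t high_hz,
                       uint32_t sample_rate_hz, uint32_t fft_size) {
    assert(sample_rate_hz > 0 && fft_size > 0);
    assert(low_hz <= high_hz && high_hz <= sample_rate_hz / 2);
    const uint64_t first = static_cast<uint64_t>(low_hz) * fft_size / sample_rate_hz;
    const uint64_t last = static_cast<uint64_t>(high_hz) * fft_size / sample_rate_hz;
    return BinRange{static_cast<std::size_t>(first), static_cast<std::size_t>(last)};
}

// Sum of squared magnitudes over the chosen bins. The caller must make sure
// the magnitudes array has at least range.last + 1 entries.
float band_energy(const float* magnitudes, BinRange range) {
    assert(magnitudes != nullptr && range.first <= range.last);
    float energy = 0.0f;
    for (std::size_t k = range.first; k <= range.last; ++k) {
        energy += magnitudes[k] * magnitudes[k];
    }
    return energy;
}
```

With a 512-point FFT at 44.1 kHz each bin is about 86 Hz wide, so 300 Hz to 3400 Hz maps to bins 3 through 39. Comparing the energy in that range against the energy elsewhere is one crude thing to try, though instruments overlap heavily with the voice range, which is a large part of why this problem is hard.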
